
This article was originally published in the Summer 2008 issue of Methods & Tools

Acceptance TDD Explained - Part 4

Lasse Koskela

Owned by the customer

Acceptance tests should be owned by the customer because their main purpose is to specify acceptance criteria for the user story, and it’s the customer, as the business expert, who is best positioned to spell out those criteria. It follows that the customer should ideally be the one writing the acceptance tests.

Having the customer write the acceptance tests helps us avoid a common problem with acceptance tests written by developers: Developers often fall into the pit of specifying technical aspects of the implementation rather than specifying the feature itself. And, after all, acceptance tests are largely a specification of functionality rather than tests for technical details (although sometimes they’re that, too).

Written together

Even though the customer should be the one who owns the acceptance tests, they don’t need to be the only one to write them. Especially when we’re new to user stories and acceptance tests, it is important to provide help and support so that nobody ends up isolated from the process because they don’t understand the tools and techniques and are therefore uncomfortable with them. By writing tests together, we can encourage the communication that inevitably happens when the customer and developer work together to specify the acceptance criteria for a story.

With the customer in their role as domain expert, the developer in the role of a technical expert, and the tester in a role that combines a bit of both, we’ve got everything covered. Of course, there are times when the customer will write stories and acceptance tests by themselves—perhaps because they were having a meeting offsite or because they didn’t have time for a discussion about the stories and their accompanying tests.

The same goes for a developer or tester who occasionally has to write acceptance tests without access to the customer. On these occasions, we’ll have to make sure that the necessary conversation happens at some point. It’s not the end of the world if a story goes into the backlog with a test that’s not ideal. We’ll notice the problem and deal with it eventually. That’s the beauty of a simple requirement format like user stories!

Another essential property for good acceptance tests ties in closely with the customer being the one who’s writing the tests: the focus and perspective from which the tests are written.

Focus on the what, not the how

One of the key characteristics that make user stories so fitting for delivering value early and often is that they focus on describing the source of value to the customer instead of the mechanics of how that value is delivered. User stories strive to convey the needs and wants—the what and why—and give the implementation—the how—little attention. In the vast majority of cases, the customer doesn’t care how the business value is derived. Well, they shouldn’t. Part of the reason many customers like to dictate the how is our lousy track record as an industry. It’s time to change that flawed perception by showing that we can deliver what the customer wants as long as we get constant feedback on how we’re doing.

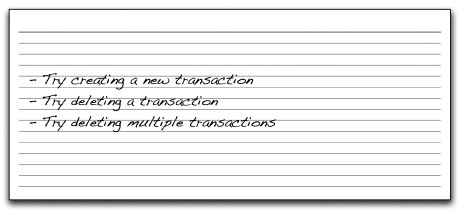

Let’s look at an example to better illustrate the difference between the what and why on one hand and the how on the other. Figure 3 shows an example of acceptance tests that go into too much detail about the solution rather than focusing on the problem: the customer’s need and requirement. All three tests shown on the card address the user interface, effectively suggesting an implementation, which isn’t what we want. While doing that, they’re also hiding the real information ("what are we actually testing here?") behind the technology.

Figure 3. Acceptance test focusing too much on the implementation

Instead, we should try to formulate our tests in terms of the problem and leave the solution up to the developers and the customer to decide and discuss at a later time when we’re implementing the tests and the story itself. Figure 4 illustrates a possible rewrite of the tests in figure 3 in a way that preserves the valuable information and omits the unnecessary details, which only clutter our intent.

Figure 4. Trimmed-down version of the tests from figure 3

Notice how, by reading these three lines, a developer is just as capable of figuring out what to test as they would be by reading the more solution-oriented tests from figure 3. Given these two alternatives, which would you consider easier to understand and parse? The volume and focus of the words we choose to write our tests with have a big effect on the effectiveness of our tests as a tool. We shouldn’t neglect that fact.
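To make the contrast concrete in code, here is a minimal sketch of what problem-oriented tests might look like once automated. The feature (logging in), the `Accounts` class, and every test name are hypothetical and chosen only for illustration; they are not taken from figures 3 and 4.

```python
# Hypothetical example: the login feature, the Accounts class, and the test
# names below are assumptions for illustration, not the article's own tests.


class Accounts:
    """Tiny in-memory stand-in for the system under test."""

    def __init__(self):
        self._passwords = {}

    def register(self, username, password):
        self._passwords[username] = password

    def can_log_in(self, username, password):
        # Unknown users have no stored password, so the comparison fails.
        return self._passwords.get(username) == password


# A solution-oriented test card might read:
#   "Type 'alice' into the username field, type 'secret' into the password
#    field, click the Login button, check the title of the welcome page."
# The tests below state only the expected behavior and leave the user
# interface entirely open.


def test_registered_user_can_log_in_with_correct_password():
    accounts = Accounts()
    accounts.register("alice", "secret")
    assert accounts.can_log_in("alice", "secret")


def test_wrong_password_is_rejected():
    accounts = Accounts()
    accounts.register("alice", "secret")
    assert not accounts.can_log_in("alice", "guess")


def test_unknown_user_is_rejected():
    accounts = Accounts()
    assert not accounts.can_log_in("mallory", "secret")


if __name__ == "__main__":
    # Run each test directly; a test runner such as pytest would also
    # discover these functions by their test_ prefix.
    test_registered_user_can_log_in_with_correct_password()
    test_wrong_password_is_rejected()
    test_unknown_user_is_rejected()
```

Each test name reads like one line on the story card, and nothing in it would need to change if the login form were redesigned. Only the glue between the tests and the real system would.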

There’s more to words, though, than just volume and focus. We also have to watch our language.
