|

Software Development Magazine - Project Management, Programming, Software Testing |

|

Scrum Expert - Articles, tools, videos, news and other resources on Agile, Scrum and Kanban |

Click here to view the complete list of archived articles

This article was originally published in the Summer 2010 issue of Methods & Tools

A High Volume Software Product Line

Peter Bell, SystemsForge,http://systemsforge.com/

Software product lines are designed to promote the efficient reuse of artifacts between projects. Domain specific modeling allows for the faster development of custom applications within a particular domain. Each provides powerful techniques for developing applications more efficiently, but together they provide a particularly effective toolkit for the faster development of custom applications. In this article I'll review an internal software product line that I have been involved with developing for a number of years, designed to rapidly build semi-custom web applications.

The article starts off with a review of our experiences and design choices. It then continues with the conclusions we have drawn from our experiences which hopefully will be of some use to anyone considering leveraging domain specific languages and/or software product line engineering on their projects to allow them to deliver applications more quickly and cost effectively. The experience report assumes a certain familiarity with domain specific modeling concepts, but if any of the terms (projectional editing, internal vs. external domain specific languages, etc) don't make sense - don't worry. They are explained along with heuristics for working with them in the second half of the article.

The Problem Space

We have found it useful to break down website development projects into three broad categories. Generally if someone has less than $5,000 to spend on an entire website including design, content and programming, we advise them to use a standard piece of software such as Wordpress for content management or e-commerce solution Yahoo! stores for e-commerce. They may want something a little more custom, but they simple don't have the budget to get it built. At the other end of the spectrum, if someone has over $50,000 to spend on a project, (web) domain specific frameworks like Grails, Rails or Django will definitely speed up development, but you don't have to engineer all of the costs out of the project using a software product line based approach. If it takes half a day instead of ten minutes to set up a base project along with a build script and a Continuous Integration server, that's not generally a problem. In the middle, projects that cost from $5,000 to $50,000 need to leverage standard functionality wherever possible, but in a way that allows for rich customization of the parts of the application that the client cares about.

We generally find that any given client will focus on customizing 10-15% of their application functionality. Some clients really care about how their discounting system works. Others are really focused on the details of their registration process or the way that clients can navigate their products or the process of signing up for events. So the goal for these "semi-custom" applications is to be able to "generate everything, customize anything" so we can deliver an acceptable solution based on industry best practices very quickly but can then work with the client to customize the parts of the application that they really care about, extending or adapting the generated functionality.

At SystemsForge, we specialize in developing these semi-custom web applications. We provide a white labeled service for ad agencies. They sell the sites, handle the project management, design the look and feel and create the HTML/CSS. We do the programming to deliver the functionality required. Most of the projects we deliver have programming costs of between $1,200 and $5,000 so we have to deliver them very efficiently. Around twenty percent of the projects require more custom functionality and have programming costs of between $5,000 and $35,000 so we need to have a process that blends efficiency with extensibility.

Recent sample projects include simple content management systems, e-commerce stores for business to business and business to consumer clients, event calendars, newsletter systems, scheduling solutions, a ticketing system for a building contractor and an application for sharing information about sitting Shiva (mourning) for a deceased person. On a superficial level the projects are fairly different, but over the years we have found a number of ways of developing the applications more efficiently by identifying commonalities.

Approaches to the Problem

Originally, we started off in 1996 by hand coding websites. Fairly quickly we noticed a substantial duplication of effort between projects, so for a short period of time we utilized informal cut and paste reuse. Pretty quickly we ran into problems with different versions of various assets and issues with finding a particular piece of functionality for reuse ("which project has the latest calendar month to view display code?").

The next quick and dirty solution was to create a project skeleton. At first we created a simple skeleton to allow us to kick off projects more quickly. We then added the latest and greatest version of our various code snippets to the project and used manual negative variability - manually deleting any files we didn't need for a particular project. Before long, the skeleton ended up looking more like a bloated corpse with far too much functionality that had been poorly designed! We tried briefly to abstract optional functionality into a separate directory, but before long we realized that we needed a more scalable solution for the reuse of code.

The first serious attempt at formal code reuse took a component based approach. We built a framework for basic content management and then added a number of modules for common functionality like e-commerce, email newsletters and event registration. Each module supported all of the functionality required by all of the clients. They used a set of configuration parameters to configure the functionality for any given project. The configuration parameters were edited using a web based "site builder" wizard and stored in a database. This worked well for the first few clients.

As we did more projects we found that each client wanted different customizations. By the time we got to fifty projects using the module based approach - each with their own distinct customization requirements - the configuration options and the underlying code for the components had become unmaintainable.

The next step in the evolution was a rather clumsy concatenation based code generator. You would fill out forms to enter metadata describing the domain and those would drive a generator which would generate the appropriate functionality by concatenating strings to build the runtime scripts. It had the benefit of moving some of the configuration from runtime to generation time, but the concatenating scripts were difficult to maintain because you couldn't easily see the kind of code that the concatenators would generate just by looking at them.

At this point we took some time off to research methodologies for reuse, learning about best practices in code generation, domain specific modeling and software product lines. We were then able to revisit the problem space using a more sophisticated set of approaches to the problem.

A Domain Specific Framework

From the many applications we had built by this time, we had a very good idea of what we wanted our languages to look like for describing any given application. We described most applications in terms of the model (business objects with properties, relationships, validation rules, administrative rules and default values), the controllers (controllers with actions that allowed us to describe lists, detail screens, import and export functions and a number of other common capabilities), the views (which allowed us again to declaratively describe at least a first cut of the functionality required for most screens) and custom data types (a cross cutting concern that allowed us to set sensible default validation rules, generate rich admin interfaces and decorate the output of the object properties with display rules, so we could - for example - say that a field was a USPhoneNumber data type and it would validate that it was 10 numeric digits, allow us to edit it using three text boxes and would display the number as (xxx) xxx-xxxx even though it was stored as a single 10 digit string). We looked carefully at a number of web frameworks available in various languages, but at that time they were not sufficiently mature to solve the range of problems we were looking to handle and the effort to implement a facade between the models we wanted to create in our domain specific languages (DSLs) and the inputs required by the frameworks available looked like it would be greater than just writing an in-house framework to interpret the models the way we wanted.

We also thought for some time about what kind of concrete syntax to use for the DSLs. Rails was just starting to become popular so we looked seriously at internal DSLs within our host language (at the time ColdFusion - the first commercially successful dynamically typed scripting language available on the JVM - providing some of the benefits that languages like Groovy and JRuby now offer - but in 2002). However, we were concerned about maintainability and what would happen if we wanted to evolve the grammar of our DSLs so we opted for external DSLs. We looked briefly at leveraging tooling like openArchitectureWare's Xtext (for textual DSLs) or MetaCase MetaEdit+ (primarily for visual DSLs). We also considered writing a custom parser using ANTLR, or even creating a forms-based interface which would persist the models to a database which was what we had done in the past. In the end we decided that the quickest win for us would be to start by using XML as a concrete syntax for the DSLs as it was very easy to parse, transform and emit and we could easily create a more human readable representation of the model for viewing by business users and could eventually add a forms based interface for generating the XML if we needed to make the DSLs end user writable. In short, XML was a simple starting point, a great transport mechanism and made it easy for us to implement the various projections of the language that we needed for different stakeholders.

The domain specific framework was a great start. It used runtime interpretation of XML files to effectively "pretend" that a bunch of class files existed for describing the domain objects, a service based interface to the model and the controller functionality. They were also used by dynamic runtime templates to create a first cut of most of the view screens along with a passive generation option for screens that needed extensive customization. It allowed for easy run-time extension by just dropping in real class files with actual methods to overload or support those that were described by the XML files, and despite doing so much at runtime the system was still very performant and easy to work with. In addition, the entire framework was realized in under 6,000 lines of code, so it was very easy to maintain.

Introducing the Software Product Line

The problem with the domain specific framework was that if we wanted to build common functionality like registration or a simple product catalog or shopping cart, it still felt very repetitive. The good news was that we could describe the functionality very concisely using the DSLs. The bad news was that even with concise languages it took a while to describe a rich, complex e-commerce system with products, product categories, product attributes, discounts, gift certificates and the many other domain objects required to fully describe such a system. We really needed a more efficient way to reuse our models while still having the ability to customize them. Here was where the software product line came in.

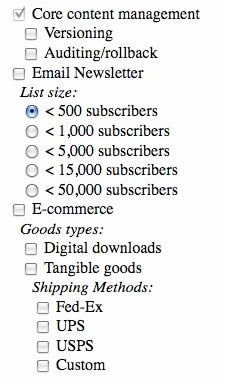

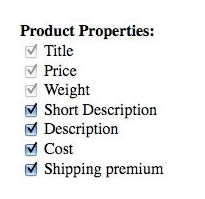

While the DSLs gave us unbounded variability, allowing us to describe any collection of domain objects, controllers and views, we also needed some way to implement bounded variability - a feature model allowing us to quickly and easily configure a first cut of the application which we could then customize from there. In the end we implemented this using a website with a forms-based interface. The system allowed a non-technical user to enter the name of a new project, to select a set of common features such as an events calendar, a product catalog, a shopping cart and a email newsletter system, and then for each to select common sub-features using a simple feature tree. The feature tree supported essential features, optional features and alternate features (with both single and multiple select capabilities). Each feature then added one or more essential or optional statements to the model. So, for example, adding a "product catalog" feature would add a product domain object with an essential title property (there had to be a title property in the generated application). Adding that feature also added some optional statements such as properties for the product object that might be useful but would not always be required. For example, short description, detailed description and price would be optional properties (unless you added a "cart" feature in which case price would become an essential property).

Figure 1: A simplified example of a feature modeling screen

The next step then was to configure the optional properties, deciding which of them to include or exclude for this particular project.

Figure 2: A simplified example of a configuration screen

The configuration information was then used to passively generate a first cut of all of the necessary XML files to describe the solution selected. We could then go in and manually edit the XML files to make changes like adding completely custom properties to the domain objects or changing the flow of the controller actions. At first we envisioned support for active regeneration of the XML files - storing the changes to the files separately and applying conditional model transforms to allow us to do things like automatically add new domain concepts or properties to applications after they had been developed and deployed. Apart from the technical challenges of the problem, we fairly quickly realized that our clients did not want their applications to change how they worked as part of an automated upgrade cycle so we decided to treat the software product line piece as simply a jump start for creating the models. This still left us with the flexibility to upgrade the implementation of our models, so at least for the simpler applications we could make substantial changes to the implementation framework without having to rework the applications manually.

Latest Focus

Recently, we have decided to make some substantial changes to the software product line. The overall structure of the system is working well, but details of the implementation are starting to become problematic. Firstly we're refactoring to use a third party web framework - Grails. While it doesn't map perfectly to all of our DSLs, we have now reached the point where Grails is sufficiently mature and the complexity of implementing some of the domain concepts we would like to express in our own system is sufficiently high that it makes more sense to implement a thin productivity layer using our software product line than to try to maintain a deeper stack in-house.

We have also continued to research DSL evolution. One of the big unsolved problems is that as your understanding of the domain grows, you often want to refactor the grammar of your DSLs. Sometimes those changes will not be backwards compatible so eventually you need to have an approach to handling DSL evolution - whether it be versioning or automated model migrations.

Finally we have also been investigating various test frameworks for describing acceptance tests for the applications so we can automatically generate acceptance tests from our requirements. We have been working on the DSLs we use to express our requirements (stories and scenarios) and the goal is to be able to generate acceptance tests directly from the scenarios in a way that is similar to what you see with the Behavior Driven Design frameworks like rspec, GSpec and JBehave.

What We're Learnt

In this section of the article I want to review some of the important concepts for working with domain specific modeling and software product lines and our experiences when working with them.

Internal vs External DSLs

In the last few years there has been a lot of discussion about internal DSLs in languages like Ruby and Groovy - and more recently in languages like Scala, Boo and Clojure. Of course, this is nothing new. Most Lisp programs are built up using DSLs defined using Macros, but the concept of language oriented programming has been gaining wider attention recently.

Internal DSLs are domain specific languages which are implemented within an existing general purpose programming language (gppl). You add domain concepts to your program and your DSL models are part of your application code and are parsed directly by the gppl parser. Internal DSLs are relatively easy to implement. Often you'll create a fluent interface using a builder pattern on top of your domain model API, so you can make statements like "new meeting from: 4.pm, to:6.pm" which are easier for business users to read and validate than traditional API calls. Internal DSLs also provide access to native language constructs for mathematical operations and control flow constructs, so if you do find a need to express conditionals, loops or mathematical operations, you don't have to write a parser to support that - it's available for free from the host language.

External DSLs require a little more work to implement. You might create a simple DSL that is implemented using XML, or put a little more effort into creating a custom textual DSL that is parsed either using a parser generated by ANTLR or perhaps using XText and XPand - now part of the Eclipse Modeling Framework. You could also create a visual external DSL using tools like those provided by the Eclipse Modeling Framework or MetaCase's MetaEdit+. External DSLs give you more control over your models. Users cannot just add any arbitrary constructs from a gppl. This is particularly useful for DSL evolution as with external DSLs it can be practical to apply model transformations to your existing model to upgrade existing models to work with a new grammar. That isn't usually practical with internal DSLs.

End User Readable vs Writable

A lot of discussion concerning DSLs is often about the practicalities of end user programming and creating DSLs that business users can write. What is often forgotten is that depending on the use case you can often get most of the benefits of DSLs by creating languages that end users can read and validate - even if they can't write them.

For example, most internal DSLs would be difficult for end users to write. They have to follow specific syntactic conventions and often the error messages thrown are not very helpful in identifying what really went wrong. In our case we did end up creating end user writable DSLs using a form based interface (which along with visual modeling tools are often the easiest input mechanisms for casual modelers), but it's worth considering whether you can get sufficient value just by creating DSLs that end users can read so they can validate that the program states what they were trying to express about their domain. Often end user readability provides most of the benefits of a DSL for much less effort than creating a truly end user writable solution.

Editing Options

There are lots of ways of editing DSLs. You could use an XML concrete syntax and some kind of XML editor, a visual modeling tool, a forms based UI, a simple text editor or using XText you could even generate a plugin for Eclipse to allow your modelers to use an IDE. Of course, with an internal DSL, your modelers will probably just use their IDE or text editor of choice for their regular general purpose programming language editing.

It's important to think carefully about the kind of people who have to be able to write the models and the support they'll need in terms of editor features, semantic checking and meaningful error messages. Creating an editor that is usable by domain experts is usually more complex than creating one that a programmer could use. One thing to consider is the possibility of pairing domain experts with developers for writing DSL models. That way the programmer can help with the structuring of the models and deal with less sophisticated tooling while allowing the domain expert to focus on describing the business rules and validating that the models properly describe what they were trying to communicate.

Projectional Editing

Recently there has been a fair bit of work in the field of projectional editors. In 2005, Martin Fowler coined the term "language workbench" to describe an IDE designed specifically to support language oriented programming for the development of DSLs. Both Intentional Software and JetBrains now offer editors that try to fulfill this promise. I would argue that there is still work to be done before it's clear whether this will become a mainstream technology, but I would thoroughly recommend downloading and trying out JetBrains MPS ("Meta Programming System") to get an idea of the potential of this approach.

At a more pragmatic and immediate level, it's worth remembering that you are not limited to just a single concrete syntax for displaying and editing your languages. It would be quite possible to support a fluent interface to allow developers to quickly implement calls against your framework/domain model, to provide a forms based interface to allow casual domain experts to add their own rules and to provide some kind of graphical representation of your models to allow business stakeholders to get a high level view of all of the models within your system.

Bounded vs Unbounded DSLs

Another important distinction is between bounded and unbounded DSLs. Unbounded DSLs are used to deal with solutions where there is a theoretically infinite solution space. A good example would be a language for describing domain objects and their properties. While there are a relatively small number of domain objects that come up on a regular basis, such a language is capable of describing an unlimited number of potential models.

Bounded DSLs are designed for configuration of systems with a limited number of possible configuration states. If you find that you need a bounded configuration DSL, make sure to research existing solutions for feature modeling. There are well proven patterns for describing configuration DSLs and it is worth reviewing them to help with the design of your specific configuration DSL(s).

Runtime Interpretation vs Code Generation

While we started off by thinking that we were in the code generation business, we realized pretty quickly that actually we were in the domain specific modeling business. There is nothing wrong with generating code. It is one of the many ways of implementing DSLs and has strengths and weaknesses. There are a number of benefits to code generation. From an intellectual property perspective it allows you to deliver to clients a specific solution for their needs rather than giving them a copy of an entire system for delivering a whole class of possible solutions. It also makes the runtime code easier for less experienced programmers to understand as the generated code will often be simpler than a runtime framework that will interpret your models. If you have a proven performance issue with a runtime framework, pre-compiling your models into code can also improve performance if that really is the bottleneck.

The main thing to ensure with code generation is that wherever possible you use active code generation so that you can regenerate the code as your models change without losing any custom code. Generally this is done by putting any custom code into different files than the generated code - often using some combination of subclassing, AOP, event driven programming or (where your language supports it) include files, partial classes and/or mixins. If you have to mix generated and custom code within a single file, protected blocks with some kind of unique multi-character delimiter between editable and generated code is a possible solution, but it's generally better to keep the generated and hand written code in separate files wherever possible.

Avoiding the Customization Cliff

Another important issue to look out for is what Krzysztof Czarnecki termed the "Customization Cliff". The ideal solution should make it very easy to do simple things, and just a little harder to do more complex things. All too often you will see a software product line which supports easy point and click selection of features, but where making a change to a feature requires writing C++ code! You want to have lots of small steps each of which give you a little more control rather than just dropping off the edge of a cliff when you hit the limitations of the expressiveness of your DSLs.

DSL evolution

Finally, think about how your DSLs may evolve over time. Generally there are four stages that a DSL will go through. To start with you might try to avoid having to change the DSL. You simply do what you can with the expressiveness that it provides and then write custom code as necessary for any special cases.

After a while, the custom code for the special cases gets repetitive and the pressure grows to allow your DSL to become more expressive. You decide to allow changes to the grammar, but only in a way that will preserve backward compatibility. That works for so long, but eventually you often find yourself needing to make a change that will not be backwards compatible. A common approach to that solution is to start to version your language, with all models having a version and automatically being run against the appropriate version of your DSL.

That works for a while, but eventually you run into issues with old models needing new language features or with the overhead of having to maintain multiple versions of your DSLs. If you have a large number of models, at this point it's worth looking at the possibility of applying model transformations to automatically upgrade old models to work with the new version of your language. Not all grammatical changes to a language allow for automated transformations, but at very least you should be able to automate some of the model transformations and provide an efficient UI for human intervention in the case of models that need to be upgraded by hand. Model transformation is typically easier to apply to external DSLs, but if you find yourself with a lot of internal DSL statements and need to apply model transforms, consider the possibility of writing a script that will walk the in-memory runtime model and generate a set of models in a new external DSL that fully describes the current state of the model. In that way you can refactor to external DSLs and then apply your model transformations to them more easily.

Conclusions

This problem domain has ended up being a really interesting space for examining best practices for developing software more efficiently. On the one hand, the very constrained price points we deal with mean that we have to get every last bit of efficiency out of our software product line. On the other hand, continued investment in the software product line over a number of years has allowed us to investigate a wide range of approaches and to get some real world experience of the relative benefits of different approaches for different classes of problems.

Related Methods & Tools articles

- Introduction to the Emerging Practice of Software Product Line Development

- Making an Incremental Transition to Software Product Line Practice

- Software Product Line Engineering with Feature Models

Back to the archive list

|

Methods & Tools Software Testing Magazine The Scrum Expert |