Nitrate: an Open Source Test Case Management System

Alexander Todorov, Mr. Senko Ltd, http://MrSenko.com

Nitrate is an open source test plan, test run and test case management tool written in Python and Django. It was initially created to replace Testopia, a test case management extension for Bugzilla. Nitrate's features include Bugzilla and JIRA integration, QPID messaging, fast search of test plans and runs, fine-grained access control for each plan, run and case, and XML-RPC APIs.

Web Site: https://github.com/Nitrate/Nitrate

Version tested: 3.8.18.x

System requirements: Python 2.7, MySQL, Django

License & Pricing: GPL 2.0, subscriptions from $200/mo

Support: GitHub issues tracker in upstream project, commercial support from http://MrSenko.com

User tutorial: http://nitrate-mrsenko.readthedocs.io/en/latest/tutorial.html

Demo server: available upon request

Background

The Nitrate test case management system (TCMS) was developed internally at Red Hat and released on GitHub in late 2014. It saw only moderate development after that and, frankly, appeared to have been abandoned until recently. The project is currently experiencing a revival, with new developers working on it. Current contributions come from three organizations and mostly deal with cleaning up technical debt and fixing minor bugs.

Nitrate was developed as a substitute for Testopia and reuses an important part of its database schema. Data migration between the two should be relatively straightforward, but I don't have first-hand experience with that.

Why do I need a test case management system?

“If it is not in the TCMS then we don't do it!”

The above motto has been the paradigm driving the development of Nitrate. The system aims to serve as a canonical source of information about testing that is useful to managers and used on a daily basis by people involved with software testing. I have used Nitrate to create and document 100+ test plans, including over 1000 new test cases, around 2000 executions across different builds and more than 20000 individual test case executions over the last few years.

Nitrate is the central hub to provide you with information about your testing activities. This is the place to assign individual tasks to testers in your team as well as to produce reports and analyze your testing processes.

You need a TCMS so you can collect information about testing in your organization. This tool will answer questions like:

- Is testing of the product complete?

- Are we ready to ship?

- How did this bug get into production?

- How are we testing a particular product feature?

Having all of this information is the basis on which your QA team can start delivering continuous improvements to the testing process!

Nitrate doesn't care about what types of testing it is used for. You can document automated or manual cases, integration or unit tests, performance tests, etc. All of them are distinguished by categories which are defined by the user and don't have special meaning within the system. This means that it is possible to model any kind of testing process within Nitrate.

Installation

Nitrate is a Django-based application and as such has few installation requirements. The recommended way is to install it inside a Python virtualenv and serve it either with Apache or with Gunicorn (plus Nginx). The documentation also describes how to create a Docker image for Nitrate and host it on Google Cloud Engine.

Currently Nitrate requires a MySQL or MariaDB database for historical reasons. However, there is an ongoing effort to make it completely independent of any database engine. I have environments which use SQLite for development and PostgreSQL for production.
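To illustrate what engine independence looks like in a Django project in general, the backend is selected through the `DATABASES` setting. The sketch below is not Nitrate's actual settings module: the `NITRATE_DB_ENGINE` environment variable, the default database name and the helper function are hypothetical, and only the backend module paths come from Django itself.

```python
import os

# Django's built-in backend module paths. Older Django releases name the
# PostgreSQL backend "django.db.backends.postgresql_psycopg2".
ENGINES = {
    "mysql": "django.db.backends.mysql",
    "postgresql": "django.db.backends.postgresql",
    "sqlite": "django.db.backends.sqlite3",
}


def database_settings(engine, name="nitrate", **options):
    """Build a Django DATABASES dict for the chosen engine."""
    if engine not in ENGINES:
        raise ValueError("unsupported engine: %s" % engine)
    config = {"ENGINE": ENGINES[engine], "NAME": name}
    config.update(options)  # e.g. USER, PASSWORD, HOST, PORT
    return {"default": config}


# In settings.py: fall back to SQLite unless the (hypothetical)
# NITRATE_DB_ENGINE environment variable selects another engine.
DATABASES = database_settings(os.environ.get("NITRATE_DB_ENGINE", "sqlite"))
```

A production settings file could then call, for example, `database_settings("postgresql", HOST="db.example.com", USER="nitrate")`, keeping the rest of the configuration identical between environments.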

Terminology

Nitrate uses the following terminology:

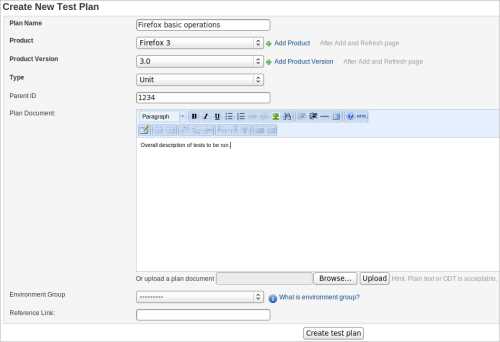

Test Plan – a high-level document that should not include specific testing steps. Instead, a Test Plan should identify which features of a product will be tested. A Test Plan contains a Document section followed by sections with Test Cases, execution history and others. You may use an IEEE 829 formatted test plan for the Document section, but this is just a recommendation and depends on your organization.

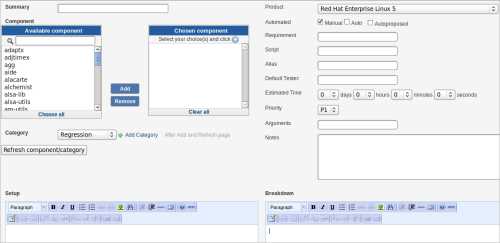

Test Case – outlines a set of conditions, or variables, under which the tester verifies whether an application or a software system meets particular criteria. In Nitrate, a Test Case represents an item of work, something which needs to be tested and the results of that documented. A Test Case is mostly free form text with added fields like category, priority, component, etc. You may use Given-When-Then grammar or 1-2-3 steps or anything else that works for you.

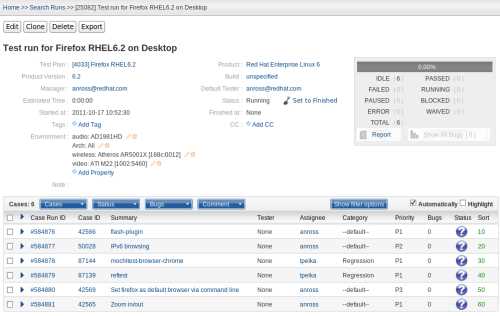

Test Run – Test Runs are created for a specific Test Plan and product build. Only Test Cases with a status of CONFIRMED will be added to the Test Run. A Test Run can be assigned to any user in Nitrate. Individual test cases inside a Test Run can be tested and commented on by other users as well.

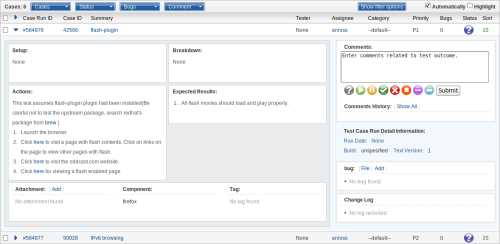

Test Case Run – represents a particular execution of a Test Case. Each Test Case Run is tied to a Test Case (what has been tested), has a build and product (which software version was tested) and a status (whether it is PASSED, FAILED or something else).
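The relationship between these four terms can be sketched with a few plain Python classes. This is only an illustration of the terminology, not Nitrate's actual Django models; the field names and the default PROPOSED status are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TestCase:
    summary: str
    status: str = "PROPOSED"  # assumed default; CONFIRMED after review


@dataclass
class TestPlan:
    name: str
    cases: List[TestCase] = field(default_factory=list)


@dataclass
class TestCaseRun:
    case: TestCase         # what has been tested
    build: str             # which software version was tested
    status: str = "IDLE"   # later PASSED, FAILED, BLOCKED, ...


@dataclass
class TestRun:
    plan: TestPlan
    build: str
    case_runs: List[TestCaseRun] = field(default_factory=list)


def create_test_run(plan, build):
    """Create a run for one build, including only CONFIRMED cases."""
    run = TestRun(plan=plan, build=build)
    for case in plan.cases:
        if case.status == "CONFIRMED":
            run.case_runs.append(TestCaseRun(case=case, build=build))
    return run
```

Calling `create_test_run(plan, "1.0-rc1")` on a plan with one confirmed and one unreviewed case yields a run with a single Test Case Run, which mirrors the rule that only CONFIRMED cases are added to a Test Run.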

Learning curve

Nitrate is relatively easy to learn. Inexperienced users will need 30 to 60 minutes to learn how to work with it. I once introduced Nitrate to a group of students who were training to become QA engineers, and they were able to document test plans, write test cases and mark test results by the end of the class.

First you create a Test Plan and start adding Test Cases to it. After you are done, you may ask another team member to review your cases. Peers can comment on test cases or set their status to CONFIRMED when they are satisfied with how the case looks.

Test execution and reporting start with the creation of a Test Run for a particular Test Plan. This test run includes a list of cases to be tested and is linked to a particular build of the product. A team leader or manager can assign test runs to other team members for execution.

When the actual testing has been performed, either manually or automatically, the test case entry inside the test run is marked as PASSED or FAILED and the tester moves on to the next item.

Some of the coolest features in Nitrate are:

- Everything assigned or managed by you is available in Nitrate's home page. This makes finding things very easy;

- WYSIWYG editor, TinyMCE at the moment, for test plan document and test case descriptions;

- Cloning: every test plan and test case can be cloned and later the clone edited. This is very helpful when you have to create a lot of identical artifacts quickly;

- When executing tests you can bulk update statuses and comments. This is particularly useful when working on large test plans that include many test cases and a single issue is failing or blocking a large portion of them. In this case simply select all of the affected cases and update them at once;

- Automatically expand the next test case inside a test run. When you select this checkbox, it will automatically open the next test case when a test case status is updated. This is a great time saver when working with manual test cases and executing them in the same order in which they were documented;

- XML-RPC API for various integrations and automatic updates.

Automation and Integration

Nitrate comes with an XML-RPC interface and a Python package to talk to it. The client-side library consists of a high-level Python module which provides a natural object interface, a low-level driver which allows direct access to Nitrate’s XML-RPC API, and a command line interpreter that is useful for fast debugging and experimenting.
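For readers who want to see the wire-level idea, any XML-RPC service can also be reached with Python's standard library alone. The sketch below uses `xmlrpc.client` from Python 3 instead of the Nitrate client package. The server URL is a placeholder and the method name in the usage comment is an assumption that should be checked against your server's API documentation. Authentication is deliberately left out.

```python
import xmlrpc.client
from functools import reduce


def connect(url):
    """Return a proxy object; no network traffic happens until a call."""
    return xmlrpc.client.ServerProxy(url, allow_none=True)


def call(server, dotted_name, *args):
    """Invoke a namespaced remote method such as 'Namespace.method'."""
    # Walk the dotted path attribute by attribute, then call the result.
    method = reduce(getattr, dotted_name.split("."), server)
    return method(*args)


# Usage (placeholder URL, assumed method name):
#   server = connect("https://nitrate.example.com/xmlrpc/")
#   cases = call(server, "TestCase.filter", {"plan": 42})
```

Keeping the method name as a string makes it easy to drive the same helper from a configuration file or from the command line while experimenting.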

By itself Nitrate is not capable of scheduling or executing any automated test scripts. It is a placeholder for test scenarios (referred to as Test Cases within Nitrate) and additional metadata like product, version, build, etc. It is possible to mark a particular scenario as automated via a checkbox and to add the name of the automated script which implements the testing steps. You can also add notes, tags, categories and priorities to test cases.

If you want to start automated test cases directly from Nitrate, you will have to create a test runner, which is specific to your infrastructure and use-case. For example if you are describing Selenium test cases, you may reference the Selenium script in the Script field of the test case and use tags to mark on which browsers the test case needs to be executed. Then your test runner needs to extract this information from Nitrate via its API and instruct your Selenium infrastructure, like SauceLabs, what to do. The pipeline described below uses a similar approach.
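The Selenium example above could be sketched roughly as follows. This is a hypothetical fragment of such a test runner, not an existing tool: it assumes the case records have already been fetched over the API and normalised into dicts with `case_id`, `is_automated`, `script` and `tags` keys, and it assumes a `browser:` tag prefix convention that you would define yourself.

```python
def plan_selenium_jobs(cases, browser_prefix="browser:"):
    """Turn test case records into (case_id, script, browser) jobs.

    Each record is assumed to be a dict with 'case_id', 'is_automated',
    'script' and 'tags' keys; adapt the names to what your API returns.
    """
    jobs = []
    for case in cases:
        if not case.get("is_automated") or not case.get("script"):
            continue  # manual case or no script, nothing to schedule
        browsers = [
            tag[len(browser_prefix):]
            for tag in case.get("tags", [])
            if tag.startswith(browser_prefix)
        ]
        for browser in browsers:
            jobs.append((case["case_id"], case["script"], browser))
    return jobs
```

The resulting list of jobs is what the runner would hand over to the Selenium infrastructure, one job per script and browser combination.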

At the moment I am not aware of any such test runners being available to the open source community and the general public. However, I am willing to work with folks who would like to integrate Nitrate into their workflow and don't mind sharing the results on GitHub!

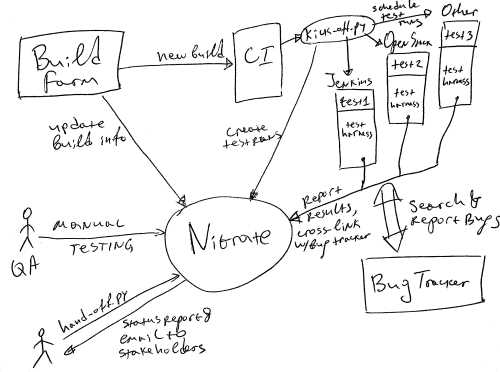

In one particular environment I have been part of, where Nitrate is heavily used, it is integrated into the following pipeline:

- A build service prepares the new product build and sends notifications via a message queue. At the same time Nitrate is updated to include a record of the new build;

- A Jenkins server picks up the notification from the message queue and searches its job configurations for jobs related to that particular product. Then Jenkins kicks off automated test jobs, which are executed on Jenkins nodes, on OpenStack instances or in an external test lab when it comes to integration testing for complex products. Which test scripts are executed, and where, is information stored in Nitrate. It is read via XML-RPC and augmented with the current build properties. There is a custom test runner responsible for this. While testing is running, Jenkins updates Nitrate and sets the status of these particular cases to RUNNING. Jenkins also adds comments with URLs to the system executing the test job (for inspection, log collection, debugging, etc.);

- This environment uses a dedicated test harness which is aware of the entire infrastructure stack. Once a test is completed, regardless of its result (PASS, FAIL, etc), the test harness updates the result in Nitrate;

- Failures are reported to the bug tracker automatically and cross-linked with Nitrate as well.

Example of pipeline integrating Nitrate
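The status updates in this pipeline follow a simple pattern: mark the case as RUNNING, attach a link to the executing system, run the test, then record the final result. A minimal sketch of that pattern is shown below. For brevity it folds the Jenkins and test harness steps into a single function, and the three callables are hypothetical wrappers that you would implement on top of your harness and the XML-RPC API.

```python
def execute_case_run(case_run_id, run_test, set_status, add_comment, job_url):
    """Mark a case run RUNNING, execute it, then record the final status.

    run_test, set_status and add_comment are callables supplied by the
    caller (hypothetical wrappers around the harness and the TCMS API).
    """
    set_status(case_run_id, "RUNNING")
    add_comment(case_run_id, "Executing at %s" % job_url)
    try:
        passed = run_test()
    except Exception as error:  # a crashed test counts as a failure
        add_comment(case_run_id, "Test error: %s" % error)
        passed = False
    final = "PASSED" if passed else "FAILED"
    set_status(case_run_id, final)
    return final
```

Because the TCMS calls are injected, the same function can be exercised locally with simple stand-ins before it is wired to a real server.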

After all automated and manual cases have been completed, a report to the stakeholders is made. This report includes short status info, links to new or existing bugs and free notes by the QA manager responsible for the product. This is generated via a helper script extracting information from Nitrate and then sent via email.
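A helper script of this kind might look roughly like the sketch below. It is not the script used in that environment: the record layout (dicts with `status` and `bugs` keys), the subject line and the addresses are all assumptions, and the data is expected to have been fetched from Nitrate beforehand.

```python
from collections import Counter
from email.message import EmailMessage


def build_status_report(product, build, case_runs, notes, sender, recipients):
    """Summarise case run statuses and bug links as an email message.

    case_runs is assumed to be a list of dicts with 'status' and 'bugs'
    keys; these names are illustrative.
    """
    totals = Counter(run["status"] for run in case_runs)
    bugs = sorted({bug for run in case_runs for bug in run.get("bugs", [])})
    lines = ["Status for %s build %s" % (product, build), ""]
    lines += ["%s: %d" % (status, totals[status]) for status in sorted(totals)]
    lines += ["", "Bugs: " + (", ".join(bugs) if bugs else "none")]
    lines += ["", "Notes:", notes]

    message = EmailMessage()
    message["Subject"] = "[QA] %s %s test status" % (product, build)
    message["From"] = sender
    message["To"] = ", ".join(recipients)
    message.set_content("\n".join(lines))
    return message
```

The returned message can be sent with the standard library, for example `smtplib.SMTP("localhost").send_message(message)`, once an SMTP server is available.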

Team velocity is also automatically calculated via a script and used for capacity planning. Again this is used mostly by the QA manager.
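How velocity is defined depends on the team. As one possible definition, assumed here purely for illustration, the sketch below counts completed case runs (PASSED or FAILED) per ISO week from a list of execution records.

```python
from collections import Counter
from datetime import date


def weekly_velocity(executions):
    """Count completed case runs per ISO (year, week).

    executions is an iterable of (finished_on, status) pairs, where
    finished_on is a datetime.date.
    """
    finished = {"PASSED", "FAILED"}
    weeks = Counter()
    for finished_on, status in executions:
        if status in finished:
            year, week, _ = finished_on.isocalendar()
            weeks[(year, week)] += 1
    return dict(weeks)


sample = [
    (date(2017, 3, 6), "PASSED"),
    (date(2017, 3, 8), "FAILED"),
    (date(2017, 3, 13), "PASSED"),
    (date(2017, 3, 14), "IDLE"),  # not finished, ignored
]
print(weekly_velocity(sample))  # {(2017, 10): 2, (2017, 11): 1}
```

Averaging these weekly counts over a few months gives a rough figure that a QA manager could use for capacity planning.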

In this setup, the initial creation of test plans in Nitrate and their linking with Jenkins jobs are done manually, usually when setting up testing for a new product. All subsequent test plans for that product are created as children of the master test plan and all of the test cases are extracted automatically by the test runner. Based on values from Nitrate, the Jenkins job knows how to schedule more jobs and kick off automated tests when necessary.

Reporting

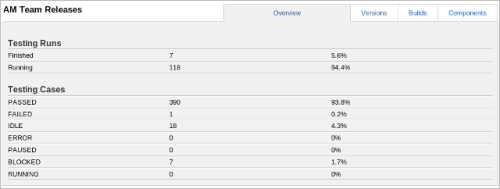

Nitrate has an integrated reporting functionality which is mostly used by managers. There are two main reports: overall and testing.

The overall report provides high-level information about a specific product: how many test cases were tested and what the results look like. It is possible to display the results per version, per build or per product component. Each detailed view shows statistics about Plans, Cases and Runs and a Progress indicator with passed/failed cases.

Product overview report

Product builds report

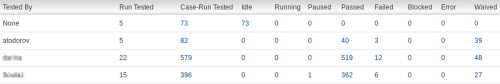

The testing report is used to extract more information about testing. It is possible to filter the results per product, version, build and execution dates. There are several available views showing testing status per tester, per case priority and per plan tag. In addition, views can be grouped by build and plan where applicable. Each of the testing report views displays statistics about the different test case statuses (PASSED, FAILED, BLOCKED, IDLE, etc.).

Per tester report

Testing report per plan and build

If you need additional reports, you can build them yourself using the XML-RPC API or contact Mr. Senko for help.
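As a starting point for a custom report, the sketch below groups case run statuses by tester and renders them as a plain-text table. It assumes the records have already been retrieved over the API and normalised into dicts with `tester` and `status` keys; both key names are illustrative.

```python
from collections import Counter, defaultdict


def per_tester_report(case_runs):
    """Group case run statuses by tester.

    case_runs is assumed to be a list of dicts with 'tester' and
    'status' keys, e.g. as fetched over the API and normalised.
    """
    report = defaultdict(Counter)
    for run in case_runs:
        report[run["tester"]][run["status"]] += 1
    return {tester: dict(counts) for tester, counts in report.items()}


def format_report(report, statuses=("PASSED", "FAILED", "BLOCKED", "IDLE")):
    """Render the report as fixed-width text rows, one per tester."""
    header = "%-12s" % "Tester" + "".join("%9s" % s for s in statuses)
    rows = [
        "%-12s" % tester + "".join("%9d" % counts.get(s, 0) for s in statuses)
        for tester, counts in sorted(report.items())
    ]
    return "\n".join([header] + rows)
```

The same grouping approach works for other dimensions, such as priority or plan tag, by changing the key used in `per_tester_report`.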

Conclusion

Nitrate is an open source test case management system that is easy to use. It is suitable for small and large teams alike and aims to be one of the building blocks of your testing infrastructure. The XML-RPC API allows you to integrate and automate Nitrate in pretty much any way you can imagine. Nitrate is the centerpiece of daily QA operations and is also useful to managers and stakeholders who need reports about testing progress and status. Because Nitrate is built with Django and Python, you can easily find developers who will maintain it in-house. Another option is to purchase a support subscription if your team doesn't want to get involved.

This article was originally published in March 2017
